AI and the Total Destruction of Trust

Generating fake videos, ripping the social fabric, and what comes next

It happened to me. I watched a video on Instagram breaking down how another reel was AI. The only problem was that I had seen the AI video the day before, and hadn’t realized it was a fake. I was shaken; it was a jarring way to realize I had been conned. I thought I was good at detecting these things, but I had fallen for this brand-new wave of AI-generated videos. And then, over the next day or two, something odd and deeply unpleasant happened: I started having trouble trusting any of the videos I was seeing.

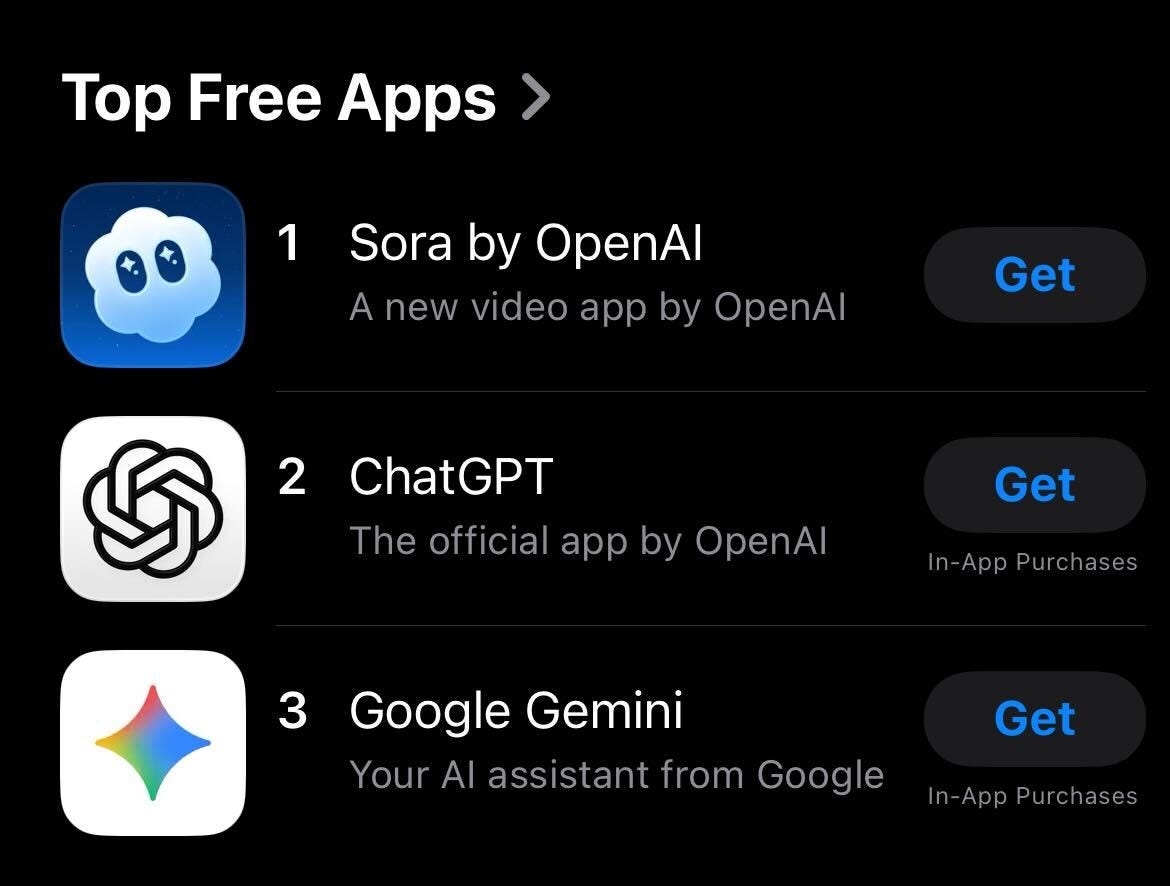

My day job is in social media. I have to be online, all the time, and that means a lot of videos. Half of U.S. adults now get news from social media, and a lot of that is TikTok and Instagram videos. And now with Sora 2 (the latest AI video model from OpenAI) we have a video generator that can very, very easily create immensely persuasive videos that could easily convince most people of just about anything. And millions of people are already using it. Sora is, alarmingly, the most popular free app out there, followed by other AI apps:

In their Sora launch announcement, OpenAI didn’t make the case for why their latest technological leap is good. They didn’t try to persuade us that generating fake videos is socially beneficial. They just said they’ve improved the tech, which they have. They also told people to use it responsibly, while knowing full well that won’t happen. The earlier iteration of their app was already used for everything from fake news to non-consensual AI porn of celebrities. Sam Altman (CEO of OpenAI) and the rest of the company know that this new video tech will be used maliciously, and that it’ll be more effective at doing harm. They just don’t care.

The one use of AI video that’s typically touted is the entertainment value. Anyone can make a silly little snippet, you can scroll endless hours of AI-generated videos, and so on and so forth. I get the temptation; I like scrolling on the internet as much as anyone. Hell, I probably like it a lot more than most. But when it comes to generative AI, most people don’t seem to know the staggering costs of this seemingly harmless fun.

Generative AI has an incredible price tag. It gobbles up water and energy at an unbelievable rate. Making just one video takes a lot of power:

Our climate, the land beneath our feet, the water that sustains life: AI companies are willing and eager to sacrifice all of these vital resources for their profit margins. Then there are the jobs in video, graphic design, and the film industry that are on the chopping block, all to pad the pockets of the super-rich. Convenience and mediocre slop entertainment just aren’t worth it, especially when there are already a million human beings who love making media that’s fun, smart, interesting and creative, whether in Hollywood or online.

Geoffrey Fowler recently got into some of the dangers of this new wave of AI video. Aside from the costs to the planet and to our jobs, there are immense risks involved with being able to produce high-quality fake videos. As Fowler notes, Sora can take images, like human faces, and incorporate them into these generated scenes. He writes:

“Recently I watched a video of myself getting arrested for drunken driving. Then I watched myself burn an American flag. Then I watched myself confess to eating toenail clippings. None of it happened. But all of it looked real enough to make me do a double take. Friends made the videos using Sora, a new artificial intelligence app from the maker of ChatGPT that’s now the No. 1 iPhone download. I gave them access to my face. I never agreed to what they’d do with it.”

There are theoretically one or two guardrails built into this nightmarish scenario, the most prominent being that every video generated by Sora has a watermark baked in which identifies it as AI. But, as Matthew Gault notes for 404 Media, “Bypassing Sora 2’s rudimentary safety features is easy.” There are numerous apps that let you remove the watermark, and videos without AI markers are already flooding Instagram and TikTok and the rest of the web.

So what can you trust? The alarming answer is that we can trust less and less of what we see online. I don’t want to be a doomer, and in the long term I’m not, but in the short term we’re in for a period of confusion and manipulation. We’re already there, to a significant degree. Lies go viral constantly, social media is already filled with AI slop, and most people have trouble telling the true from the false. So now we’re in for a period of fake images and videos, and compared to what’s come before this next stage is going to be a tidal wave of deception.

It’s already beginning. We’re already seeing what Gizmodo calls “an explosion in realistic AI videos on social media.” Notably, on the MAGA side of the internet, fake protest videos are already running wild. Matt Novak describes videos like one of an “antifa” protester harassing soldiers and getting pepper-sprayed in the face. It is entirely fake, and has 40 million views on Instagram. And that’s just one of countless examples from the last week alone.

Some people will never wake up to the fact that their feeds are filled with AI content, and others simply won’t care. Facebook recommends AI videos constantly, Instagram feeds them to three billion users, and many people appear unfazed by the fact that they’re constantly being tricked. However, we should note that a whole lot of folks out there do care, do give a shit that we’re being conned and bombarded by artificial content. Millions and millions of people see the danger of videos that claim to show real, important events, but are entirely fake, entirely manufactured for clicks and societal manipulation.

It’s vitally important right now that you oppose AI video, and Big Tech more broadly, because at the moment a handful of tech oligarchs are cashing in on AI while ignoring the ecological damage, the economic danger, and the far-reaching risks of sacrificing our grasp on reality. Not only are these billionaires eagerly trading our planet for profit, they’re also trading in our ability to detect what’s real. Shredding our social fabric doesn’t bother them — some are even excited about it.

There is, unexpectedly, at least one potential upside to this cascading disaster. When I realized I’d been fooled by AI content (at least once) the other day, a strange thought came to me. Well, there were two. The first was that I could no longer trust any of these videos. As I watched a funny little clip that was almost certainly made by a human being, I realized that I could no longer be sure; I could no longer trust any of this stuff. The next thought was that maybe it’s for the best. Maybe it’s good for me to just get off Instagram and TikTok. And, one day, maybe it’ll be good for society as a whole to move beyond mind-numbing social media scrolling. Maybe, out of this mess, a different way of relating to media and journalism and art will emerge.

Now I’m an optimist, but not so much so that I think this backlash against AI will be swift and decisive. I think it’ll come in fits and starts. People, like myself, will be furious that we can no longer trust what we’re seeing. While millions make and watch silly videos that consume resources and provide nothing significant, a rising tide will push back. People are already protesting the construction of data centers in their communities, halting the physical manifestations of the AI boom. And now it’s time for the backlash against its detrimental societal impacts to also accelerate.

None of us want a world without trust. We don’t want a world where everything must be second-guessed, fact-checked, questioned. Part of the ubiquitous mental instability, anxiety, and nihilism in our world is a lack of trust — in institutions, in media, and ultimately in one another. AI is multiplying this problem exponentially. We can’t trust AI videos, and now we can’t tell what’s AI.

In the universe of Dune (those bestselling books and blockbuster movies) humans have rejected AI. By the time the books take place, humanity has already gone through trials and tribulations around artificial intelligence, ultimately choosing to rid itself entirely of computers that are capable of thought. As the Dune Wiki says:

“The Butlerian Jihad … was the crusade against computers, thinking machines, and conscious robots. As mankind spread throughout the known universe, technology advanced and eventually machines were made that would make decisions for people. This propelled the creators of these machines into a new technocratic class, effectively controlling the worlds of the common people. Mankind eventually rebelled against these machines and their creators in a nigh-religious war that sought to retake the thinking soul of mankind from the gods of machine logic.”

In the aftermath of this particular holy war humanity decided that people ought never be replaced by machines. Now, in our universe, machines haven’t reached this stage of real thinking, even though AI boosters want you to think they have. But we are at the point where these computers are taking jobs, replacing critical thought, and eroding our trust in every image and video we see. AI is increasingly hurting the very fabric of society, all while enriching a handful of people who are eager to replace any semblance of democracy with techno-feudalism.

Regulation, both of the technology itself and of the billionaires who control it, is imperative. It’s past time we realize this generative AI stuff isn’t worth it, and past time to create a society where a handful of oligarchs can’t drag our species towards collapse. Is it worth building data centers that consume as much power as New York City to generate dull, semi-humorous videos? Is it worth sucking up drinking water to make fake protest clips? Is an artificial video of a dog walking on two legs really worth destroying our trust in reality? - JP

It's actually less scary that AI videos will exist to trick people and more scary that people will watch a genuine video you send to them of war crimes, their favorite politician saying psycho things, etc. and then those people will react by claiming that what you're showing them is AI generated. It's the forever excuse for nationalists to cheer for "their team" while "their team" participates in the indiscriminate destruction of innocent human lives.

Wow, so much here. Thank you for putting all this together.